Introduction

Long Short-Term Memory (LSTM) is a type of recurrent neural network widely used in deep learning. LSTM was designed to mitigate the vanishing gradient problem of standard RNNs. However, LSTM networks can still suffer from exploding gradients. Thanks to its memory mechanism, LSTM is commonly used for sequential data such as time series forecasting. In this article, I would like to talk about the LSTM architecture and build it with PyTorch.

Architecture

First of all, let’s talk about the architecture of LSTM.

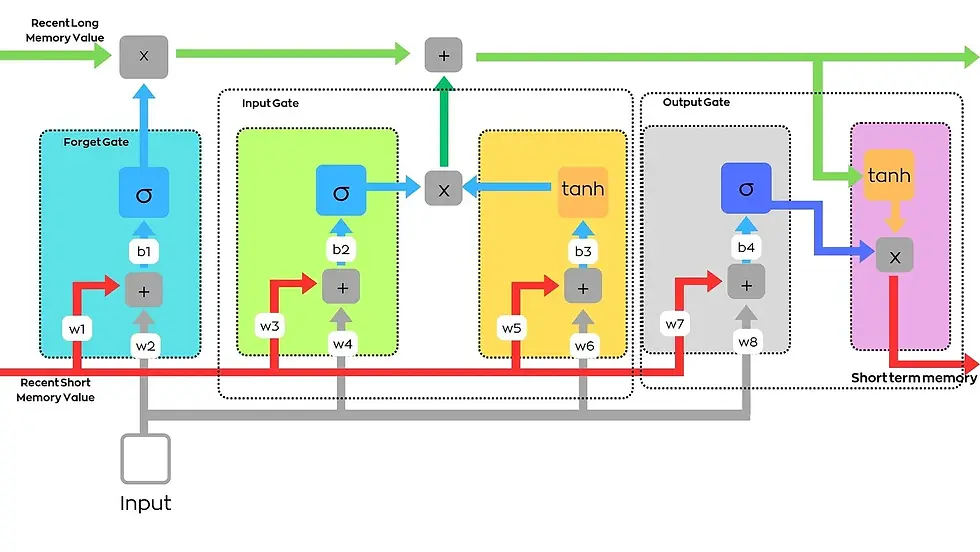

An LSTM has two types of memory: the long-term memory (also called the cell state), shown as the green line in the figure, and the short-term memory (the hidden state), shown as the red line. An LSTM also has three gates: the forget gate, the input gate and the output gate.
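In equation form, one step of the single-value LSTM used in this article can be written as below. The symbols are my own labels, not taken from the figure: x_t is the input, h is the short-term memory (red line), c is the long-term memory (green line), σ is the sigmoid, and each cell has a weight on the short-term memory (index 1), a weight on the input (index 2) and a bias. The cell colors follow the names used in the code later in the article.

```latex
\begin{aligned}
f_t &= \sigma\left(w_{f,1}\, h_{t-1} + w_{f,2}\, x_t + b_f\right) && \text{forget gate (blue cell)}\\
i_t &= \sigma\left(w_{i,1}\, h_{t-1} + w_{i,2}\, x_t + b_i\right) && \text{input gate (green cell)}\\
\tilde{c}_t &= \tanh\left(w_{c,1}\, h_{t-1} + w_{c,2}\, x_t + b_c\right) && \text{candidate memory (yellow cell)}\\
c_t &= f_t\, c_{t-1} + i_t\, \tilde{c}_t && \text{new long-term memory}\\
o_t &= \sigma\left(w_{o,1}\, h_{t-1} + w_{o,2}\, x_t + b_o\right) && \text{output gate (gray cell)}\\
h_t &= o_t \tanh(c_t) && \text{new short-term memory}
\end{aligned}
```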

Calculation

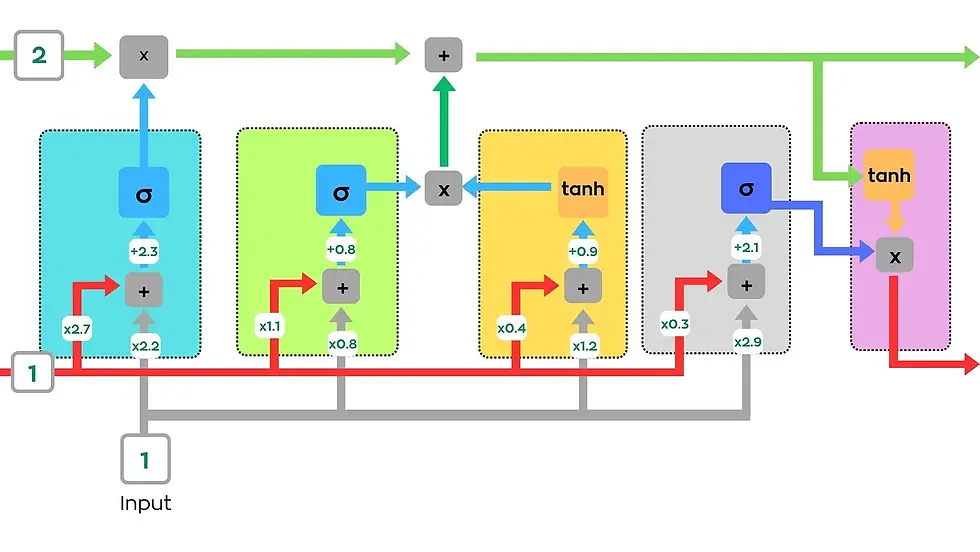

Assume that the previous long-term memory is 2, the previous short-term memory is 1 and the input is 1. The initial weights and biases are written in the figure.

Let's calculate the output.

Forget Gate

(1 x 2.2) + (1 x 2.7) = 4.9

4.9 + 2.3 = 7.2

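sigmoid(7.2) ≈ 0.999 ≈ 1

Remember that:

The forget gate output is about 1. We multiply it by the previous long-term memory (2), so the long-term memory stays at about 2. In other words, the forget gate decides how much of the long-term memory to keep.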
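The forget-gate arithmetic can be checked with a few lines of plain Python (standard library only; the sigmoid helper is my own, not part of the article's code). It also shows that a sigmoid never reaches exactly 1: the 1 above is sigmoid(7.2) ≈ 0.9993 rounded, so the long-term memory keeps about 1.9985 of its value of 2.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


# Values from the worked example
short_memory, input_value, long_memory = 1.0, 1.0, 2.0

# Forget gate (blue cell): weight 2.7 on the short-term memory, 2.2 on the input, bias 2.3
z_forget = short_memory * 2.7 + input_value * 2.2 + 2.3
forget = sigmoid(z_forget)

print(f"z = {z_forget:.1f}, sigmoid(z) = {forget:.4f}")
print(f"long-term memory kept: {forget * long_memory:.4f}")
```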

Input Gate

Green cell

(1 x 1.1) + (1 x 0.8) = 1.9

1.9 + 0.8 = 2.7

sigmoid(2.7) = 0.937

Yellow cell

(1 x 0.4) + (1 x 1.2) = 1.6

1.6 + 0.9 = 2.5

tanh(2.5) = 0.987
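The two input-gate cells can be checked the same way. In this plain-Python sketch (the sigmoid helper is again my own), the sigmoid in the green cell decides how much to write, and the tanh in the yellow cell produces the value to write. Their product, about 0.924 with unrounded values, is the amount added to the long-term memory.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


short_memory, input_value = 1.0, 1.0

# Green cell: sigmoid gives the fraction (0 to 1) of the candidate to write
green = sigmoid(short_memory * 1.1 + input_value * 0.8 + 0.8)
# Yellow cell: tanh gives the candidate value itself (-1 to 1)
yellow = math.tanh(short_memory * 0.4 + input_value * 1.2 + 0.9)

print(f"green = {green:.3f}, yellow = {yellow:.3f}")
print(f"amount written to long-term memory = {green * yellow:.3f}")
```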

Remember that:

We multiply the outputs of these two cells and add the product to the long-term memory.

0.937 x 0.987 ≈ 0.925

2 + 0.925 = 2.925

Output Gate

Gray cell

(1 x 0.3) + (1 x 2.9) = 3.2

3.2 + 2.1 = 5.3

sigmoid(5.3) ≈ 0.995

Pink cell

The pink cell applies tanh to the new long-term memory.

tanh(2.925) ≈ 0.994

We multiply this value by the gray cell output.

0.995 x 0.994 ≈ 0.989

Finally, our new short-term memory value is about 0.989. The calculation then repeats with the next input value, using the updated long-term and short-term memories, and so on for every element of the sequence.
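The whole step, repeated over a sequence, can be sketched in plain Python. The tuples and lstm_step are my own names that mirror the blue, green, yellow and gray cells; only the first input (1) comes from the example, and the two inputs after it are hypothetical. The first step reproduces a short-term memory of about 0.9893.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# (weight on short-term memory, weight on input, bias) for each cell, from the example
FORGET = (2.7, 2.2, 2.3)     # blue cell
INPUT = (1.1, 0.8, 0.8)      # green cell
CANDIDATE = (0.4, 1.2, 0.9)  # yellow cell
OUTPUT = (0.3, 2.9, 2.1)     # gray cell


def lstm_step(x, long_memory, short_memory):
    """One LSTM step; returns (new long-term memory, new short-term memory)."""
    def pre_activation(cell):
        w_short, w_input, bias = cell
        return short_memory * w_short + x * w_input + bias

    forget = sigmoid(pre_activation(FORGET))
    write = sigmoid(pre_activation(INPUT))
    candidate = math.tanh(pre_activation(CANDIDATE))
    output = sigmoid(pre_activation(OUTPUT))

    new_long = forget * long_memory + write * candidate
    new_short = output * math.tanh(new_long)
    return new_long, new_short


long_memory, short_memory = 2.0, 1.0
for x in [1.0, 0.5, 0.0]:  # only the first input is from the example; the rest are hypothetical
    long_memory, short_memory = lstm_step(x, long_memory, short_memory)
    print(f"input {x}: long-term {long_memory:.4f}, short-term {short_memory:.4f}")
```

Each step feeds its two outputs back in as the memories for the next input, which is what "again and again" means for a sequence.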

This is forward propagation. After the network computes the last output, backpropagation computes the gradient of the loss with respect to each weight and bias, and the optimizer uses these gradients to update the parameters so that the loss decreases.
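To make "decrease the loss" concrete, here is a minimal hand-written backpropagation for a single parameter, the gray-cell bias, in plain Python. The target value 0.5 and the learning rate are hypothetical. The chain-rule gradient is checked against a finite difference, and one gradient-descent step lowers the loss. The gradient is small because the gray-cell sigmoid is nearly saturated at 5.3.

```python
import math

TARGET = 0.5  # hypothetical label, not from the article


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(b_o):
    """One step of the worked example, with the gray-cell bias b_o as a variable.

    Returns (new short-term memory, tanh(new long-term memory), gray cell output).
    """
    long_memory = sigmoid(7.2) * 2.0 + sigmoid(2.7) * math.tanh(2.5)  # independent of b_o
    tanh_c = math.tanh(long_memory)
    o = sigmoid(0.3 + 2.9 + b_o)  # short-term memory and input are both 1
    return o * tanh_c, tanh_c, o


def loss_at(b_o):
    return (forward(b_o)[0] - TARGET) ** 2


b_o, lr = 2.1, 10.0  # bias from the example and an arbitrary learning rate
h, tanh_c, o = forward(b_o)

# Chain rule: dL/db_o = 2(h - target) * dh/do * do/dz, with z = 3.2 + b_o
grad = 2 * (h - TARGET) * tanh_c * o * (1 - o)

# Central finite difference to check the hand-derived gradient
eps = 1e-6
numeric = (loss_at(b_o + eps) - loss_at(b_o - eps)) / (2 * eps)

loss_before = loss_at(b_o)
b_o -= lr * grad  # one gradient-descent step
loss_after = loss_at(b_o)
print(f"grad = {grad:.6f} (finite difference {numeric:.6f})")
print(f"loss: {loss_before:.5f} -> {loss_after:.5f}")
```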

Building it by hand

Libraries

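First, we import the necessary libraries. Lightning (PyTorch Lightning) provides the LightningModule base class, which organizes the model, the training step and the optimizer in one place.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.optim import Adam
import lightning as L
```

Weights and biases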

Next, we define the weights and biases. They live in a class that inherits from Lightning's LightningModule.

```python
class SimpleLSTM(L.LightningModule):

    def __init__(self):
        super().__init__()

        mean = torch.tensor(0.0)
        std = torch.tensor(1.0)

        # Blue cell (forget gate) weights and bias
        self.wbc1 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.wbc2 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.bbc1 = nn.Parameter(torch.tensor(0.), requires_grad=True)

        # Green cell (input gate) weights and bias
        self.wgc1 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.wgc2 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.bgc1 = nn.Parameter(torch.tensor(0.), requires_grad=True)

        # Yellow cell (candidate memory) weights and bias
        self.wyc1 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.wyc2 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.byc1 = nn.Parameter(torch.tensor(0.), requires_grad=True)

        # Gray cell (output gate) weights and bias; names match those used in lstm_cells
        self.wgrc1 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.wgrc2 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.bgrc1 = nn.Parameter(torch.tensor(0.), requires_grad=True)
```

The “requires_grad=True” argument tells PyTorch to track gradients for these parameters, so that backpropagation can update them according to the loss. For the first test, however, I replaced them with the fixed values from the example above.
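A minimal toy example (my own, not part of the model) of what requires_grad does: after backward(), PyTorch stores a gradient only for tensors that track gradients. Note that nn.Parameter already defaults to requires_grad=True, so passing it explicitly, as in the class above, is optional.

```python
import torch
import torch.nn as nn

# Toy example: one trainable parameter and one plain tensor
w = nn.Parameter(torch.tensor(1.0))  # nn.Parameter sets requires_grad=True by default
c = torch.tensor(1.0)                # an ordinary tensor: gradients are not tracked

loss = (2 * w + c - 1) ** 2          # loss = 4 when w = 1 and c = 1
loss.backward()                      # backpropagation fills in .grad for tracked tensors

print(w.requires_grad, w.grad)       # dL/dw = 2 * (2w + c - 1) * 2 = 8
print(c.requires_grad, c.grad)       # no gradient is stored for c
```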

```python
class SimpleLSTM(L.LightningModule):

    def __init__(self):
        super().__init__()

        self.wbc1 = 2.7
        self.wbc2 = 2.2
        self.bbc1 = 2.3

        self.wgc1 = 1.1
        self.wgc2 = 0.8
        self.bgc1 = 0.8

        self.wyc1 = 0.4
        self.wyc2 = 1.2
        self.byc1 = 0.9

        self.wgrc1 = 0.3
        self.wgrc2 = 2.9
        self.bgrc1 = 2.1
```

Cells

The lstm_cells method takes input_value, long_memory and short_memory, and connects all the cells according to the figure.

```python
    def lstm_cells(self, input_value, long_memory, short_memory):
        # Blue cell (forget gate): fraction of the long-term memory to keep
        blue_cell = torch.sigmoid((short_memory * self.wbc1) +
                                  (input_value * self.wbc2) +
                                  self.bbc1)

        # Green cell (input gate): fraction of the candidate to write
        green_cell = torch.sigmoid((short_memory * self.wgc1) +
                                   (input_value * self.wgc2) +
                                   self.bgc1)

        # Yellow cell: candidate value for the long-term memory
        yellow_cell = torch.tanh((short_memory * self.wyc1) +
                                 (input_value * self.wyc2) +
                                 self.byc1)

        updated_long_memory = (long_memory * blue_cell) + (green_cell * yellow_cell)

        # Gray cell (output gate): fraction of tanh(long-term memory) to output
        gray_cell = torch.sigmoid((short_memory * self.wgrc1) +
                                  (input_value * self.wgrc2) +
                                  self.bgrc1)

        updated_short_memory = torch.tanh(updated_long_memory) * gray_cell

        return [updated_long_memory, updated_short_memory]
```

Forward Propagation

In forward, I set the initial long-term memory to 2 and the short-term memory to 1, as in the example, and run one step with the first input value.

```python
    def forward(self, input):
        long_memory = 2
        short_memory = 1

        value = input[0]
        long_memory, short_memory = self.lstm_cells(value, long_memory, short_memory)

        return short_memory
```

Prediction

Putting it all together, I defined the model with the fixed weights and predicted the value.

```python
import torch
import lightning as L


class SimpleLSTM(L.LightningModule):

    def __init__(self):
        super().__init__()

        # Fixed weights and biases from the worked example;
        # the attribute names must match the ones used in lstm_cells
        self.wbc1 = 2.7   # blue cell (forget gate)
        self.wbc2 = 2.2
        self.bbc1 = 2.3
        self.wgc1 = 1.1   # green cell (input gate)
        self.wgc2 = 0.8
        self.bgc1 = 0.8
        self.wyc1 = 0.4   # yellow cell (candidate memory)
        self.wyc2 = 1.2
        self.byc1 = 0.9
        self.wgrc1 = 0.3  # gray cell (output gate)
        self.wgrc2 = 2.9
        self.bgrc1 = 2.1

    def lstm_cells(self, input_value, long_memory, short_memory):
        blue_cell = torch.sigmoid((short_memory * self.wbc1) +
                                  (input_value * self.wbc2) +
                                  self.bbc1)
        green_cell = torch.sigmoid((short_memory * self.wgc1) +
                                   (input_value * self.wgc2) +
                                   self.bgc1)
        yellow_cell = torch.tanh((short_memory * self.wyc1) +
                                 (input_value * self.wyc2) +
                                 self.byc1)
        updated_long_memory = (long_memory * blue_cell) + (green_cell * yellow_cell)

        gray_cell = torch.sigmoid((short_memory * self.wgrc1) +
                                  (input_value * self.wgrc2) +
                                  self.bgrc1)
        updated_short_memory = torch.tanh(updated_long_memory) * gray_cell

        return [updated_long_memory, updated_short_memory]

    def forward(self, input):
        long_memory = 2
        short_memory = 1

        value = input[0]
        long_memory, short_memory = self.lstm_cells(value, long_memory, short_memory)

        return short_memory


input = 1.
model = SimpleLSTM()
print("Predict is", model(torch.tensor([input]).detach()))
```

-OUTPUT-

Predict is tensor(0.9893)

The result matches the hand calculation above, up to rounding.
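As a cross-check, PyTorch's built-in nn.LSTMCell should give the same numbers once it is loaded with the example's weights. This sketch relies on PyTorch's documented gate order for the stacked weights (input, forget, cell, output), maps the green, blue, yellow and gray cells onto it, and zeroes bias_hh because nn.LSTMCell adds two bias vectors while the hand-built cell has one.

```python
import torch
import torch.nn as nn

# Example weights in nn.LSTMCell's gate order: input (green), forget (blue), cell (yellow), output (gray)
w_input = torch.tensor([[0.8], [2.2], [1.2], [2.9]])  # weights on the input value
w_short = torch.tensor([[1.1], [2.7], [0.4], [0.3]])  # weights on the short-term memory
bias = torch.tensor([0.8, 2.3, 0.9, 2.1])

cell = nn.LSTMCell(input_size=1, hidden_size=1)
with torch.no_grad():
    cell.weight_ih.copy_(w_input)
    cell.weight_hh.copy_(w_short)
    cell.bias_ih.copy_(bias)
    cell.bias_hh.zero_()  # keep a single bias per gate, like the hand-built cell

x = torch.tensor([[1.0]])   # shape (batch, input_size)
h0 = torch.tensor([[1.0]])  # short-term memory
c0 = torch.tensor([[2.0]])  # long-term memory
with torch.no_grad():
    h1, c1 = cell(x, (h0, c0))

print(f"short-term {h1.item():.4f}, long-term {c1.item():.4f}")
```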

With backpropagation

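To train the model with backpropagation, three things change: the weights and biases become trainable nn.Parameter objects with random initial values again, forward runs the cell over a sequence of four values starting from empty memories, and training_step computes a squared-error loss.

We use Adam as the optimizer.

During training, the optimizer keeps updating the weights and biases to reduce the loss. Each update lowers the loss a little, although training is not guaranteed to reach the global optimum.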
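The same training idea can be sketched without Lightning, as a plain PyTorch loop. To stay short, this sketch swaps the hand-written cell for PyTorch's built-in nn.LSTMCell, and the two four-value sequences and their labels are hypothetical toy data. Each backward() call backpropagates through all four time steps, and Adam updates every weight and bias, so the loss at the end should be lower than at the start.

```python
import torch
import torch.nn as nn
from torch.optim import Adam

torch.manual_seed(0)

# Hypothetical toy data: two sequences of four values, labelled 0 and 1
inputs = torch.tensor([[0.0, 0.5, 0.25, 1.0],
                       [1.0, 0.5, 0.25, 1.0]])
labels = torch.tensor([0.0, 1.0])

cell = nn.LSTMCell(input_size=1, hidden_size=1)
optimizer = Adam(cell.parameters(), lr=0.1)


def predict(sequence):
    """Unroll the cell over one sequence from empty memories; return the last short-term memory."""
    h = torch.zeros(1, 1)
    c = torch.zeros(1, 1)
    for value in sequence:
        h, c = cell(value.reshape(1, 1), (h, c))
    return h.squeeze()


def total_loss():
    return sum((predict(seq) - y) ** 2 for seq, y in zip(inputs, labels))


initial = total_loss().item()
for epoch in range(300):
    optimizer.zero_grad()
    loss = total_loss()
    loss.backward()    # backpropagation through all four time steps
    optimizer.step()   # Adam updates every weight and bias
final = total_loss().item()

print(f"loss: {initial:.4f} -> {final:.4f}")
```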

```python
import torch
import torch.nn as nn
from torch.optim import Adam
import lightning as L


class SimpleLSTM(L.LightningModule):

    def __init__(self):
        super().__init__()

        mean = torch.tensor(0.0)
        std = torch.tensor(1.0)

        # Weights start from a standard normal distribution, biases at zero.
        # Each bias wraps its own zero tensor: nn.Parameter shares storage with
        # the tensor it wraps, so reusing one tensor would tie the biases together.

        # Blue cell (forget gate) weights and bias
        self.wbc1 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.wbc2 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.bbc1 = nn.Parameter(torch.tensor(0.), requires_grad=True)

        # Green cell (input gate) weights and bias
        self.wgc1 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.wgc2 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.bgc1 = nn.Parameter(torch.tensor(0.), requires_grad=True)

        # Yellow cell (candidate memory) weights and bias
        self.wyc1 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.wyc2 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.byc1 = nn.Parameter(torch.tensor(0.), requires_grad=True)

        # Gray cell (output gate) weights and bias
        self.wgrc1 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.wgrc2 = nn.Parameter(torch.normal(mean=mean, std=std), requires_grad=True)
        self.bgrc1 = nn.Parameter(torch.tensor(0.), requires_grad=True)

    def lstm_cells(self, input_value, long_memory, short_memory):
        blue_cell = torch.sigmoid((short_memory * self.wbc1) +
                                  (input_value * self.wbc2) +
                                  self.bbc1)
        green_cell = torch.sigmoid((short_memory * self.wgc1) +
                                   (input_value * self.wgc2) +
                                   self.bgc1)
        yellow_cell = torch.tanh((short_memory * self.wyc1) +
                                 (input_value * self.wyc2) +
                                 self.byc1)
        updated_long_memory = (long_memory * blue_cell) + (green_cell * yellow_cell)

        gray_cell = torch.sigmoid((short_memory * self.wgrc1) +
                                  (input_value * self.wgrc2) +
                                  self.bgrc1)
        updated_short_memory = torch.tanh(updated_long_memory) * gray_cell

        return [updated_long_memory, updated_short_memory]

    def forward(self, input):
        # Start with empty memories and run one step per input value
        long_memory = 0
        short_memory = 0

        value1 = input[0]
        value2 = input[1]
        value3 = input[2]
        value4 = input[3]

        long_memory, short_memory = self.lstm_cells(value1, long_memory, short_memory)
        long_memory, short_memory = self.lstm_cells(value2, long_memory, short_memory)
        long_memory, short_memory = self.lstm_cells(value3, long_memory, short_memory)
        long_memory, short_memory = self.lstm_cells(value4, long_memory, short_memory)

        return short_memory

    def configure_optimizers(self):
        return Adam(self.parameters())

    def training_step(self, batch, batch_idx):
        input_i, label_i = batch
        output_i = self.forward(input_i[0])
        loss = (output_i - label_i) ** 2  # squared error

        self.log("train_loss", loss)
        # Log the prediction under a separate name for each label
        if label_i == 0:
            self.log("out_0", output_i)
        else:
            self.log("out_1", output_i)

        return loss
```

In this article, we talked about the LSTM architecture and built it with PyTorch. I hope it was easy to follow.
